DeGoogling adventures: PeerTube

If you’ve been reading this blog for a while you’ll know that I’m quite keen on the idea of getting Big Tech out of my life as much as possible. If you haven’t, well done – we’re only in the first paragraph and you’ve already caught up.

I’ll be honest: when I say I want to deGoogle, YouTube isn’t high on my list of priorities. I only upload gaming videos over there, and that’s not data I’m as precious about getting out of their clutches as I would be with emails, documents or a calendar. On the other hand, after years of 15Mbps VDSL I’m now in possession of a shiny new fibre connection with gigabit upload, so why not make use of it?

The Fediverse

You may or may not have already heard of the Fediverse. It’s a term for a collection of indie websites which are all connected into a loose federation by the ActivityPub protocol. There are lots of different flavours of Fediverse website, dictated by the software they’re running. Mastodon is a drop-in replacement for Twitter/X, Friendica for Facebook, Lemmy for Reddit, and so on. What makes them special is that, instead of being powered by massive ranks of servers owned by one of the Big Tech giants, each server (or “instance”) is independent, like the forums of yesteryear, but you can still interact with people whose accounts are hosted on different servers. If you don’t like your server, you can pack up your data and move to a different one while still being able to interact with the same circle of people. It’s social media that’s people-first rather than data-harvesting-first. There’s no venture capital looking to extract value (but please tip your server, because there’s no venture capital injection in the first place either).

I’ve been on Mastodon for a while over at toot.wales, but I thought this weekend I’d have a stab at spinning up my own PeerTube server at home. Unlike text-based social media, hosting video files gets very expensive very fast. I’m uploading hour+ Let’s Play videos at 1440p 60fps, which chew up a massive amount of space. This is something I’d only ever be able to afford to do on a self-hosted basis, but I do have a half rack of servers and a shiny new gigabit connection. Game on.

The Docker debacle

I like Docker. Containerised applications are great for both security and convenience, but I’m not a CLI purist. While there are many things a shell is good for, like complex file operations that you script on the fly, a GUI is a lot better at actually exposing what’s going on and putting information in front of your eyeballs. I like to run my Docker apps in Portainer, so I copied in the Compose file and the .env file, and PeerTube resolutely refused to deploy. Much headscratching and a dive into their documentation later, I discovered that I had to create an Nginx template manually, which seems a little counterintuitive. Why provide a Docker install if it’s not going to automagically populate everything it needs from the Compose file and the .env file?
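If you want to catch the missing template before the stack refuses to start, a small preflight check is one option. This is only a sketch: the relative paths in `REQUIRED_FILES` are my assumption of the layout the upstream Docker guide uses, and `missing_files` is a hypothetical helper, not something PeerTube or Portainer ships. A Portainer stack may well mount these files somewhere else, so adjust the list to match.

```python
from pathlib import Path

# Assumed layout: paths relative to the stack directory. These are a guess at
# the upstream defaults and will differ if your volumes are mounted elsewhere.
REQUIRED_FILES = (
    "docker-compose.yml",
    ".env",
    "docker-volume/nginx/peertube",  # the Nginx template that is created by hand
)


def missing_files(stack_dir, required=REQUIRED_FILES):
    """Return the required files that are absent or empty under stack_dir."""
    root = Path(stack_dir)
    missing = []
    for rel in required:
        path = root / rel
        # An empty file is as useless as a missing one, so flag both.
        if not path.is_file() or path.stat().st_size == 0:
            missing.append(rel)
    return missing
```

Calling `missing_files(".")` from the stack directory returns an empty list when everything is in place, and the names of the gaps otherwise.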

PeerTube deployed

Anyway, I finally got all that straightened out and the app deployed. It’s got that clunky designed-by-engineers feel to it that a lot of Fediverse software shares, where developers and power users have just got so used to the nuts and bolts of how something works that they don’t even notice the UX pitfalls any more.

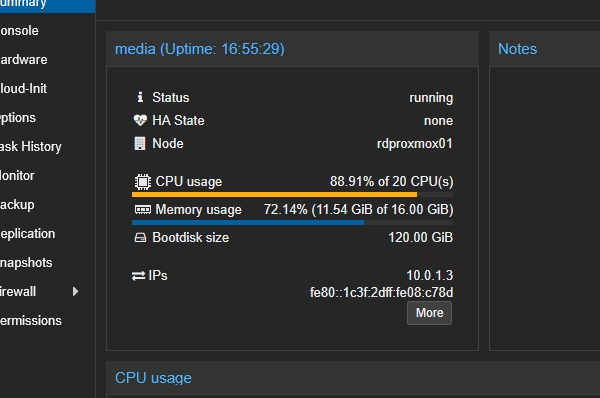

On to my first bugbear: to enable the video resolution picker in the player, PeerTube transcodes each upload in advance to a number of preset resolutions, and the transcoding is dog-slow. Out of the box it runs on CPU only, and without installing a plugin to enable hardware acceleration you might be old and grey before you’ve finished importing a video archive of any size. Luckily I only have ~60 videos to upload at the time of writing, but it’s still a bit of a chore. In the meantime I’ve given the server 20 threads to play with.

I’ll probably drop it down to a more reasonable core count once it’s done importing all my existing videos, since 20 threads is half the CPU resources of the whole server.
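To get a feel for why the import takes so long, here is a rough sketch of how the work multiplies. It assumes one encode per enabled preset at or below the source resolution, and the heights in `PRESET_HEIGHTS` are an assumed ladder rather than a record of what this instance has switched on. Both function names are made up for illustration.

```python
# Assumed preset heights; the real list is whatever is enabled in the
# instance's transcoding settings.
PRESET_HEIGHTS = (144, 240, 360, 480, 720, 1080, 1440, 2160)


def renditions_for(source_height, enabled=PRESET_HEIGHTS):
    """Heights to encode: every enabled preset at or below the source height."""
    return [h for h in enabled if h <= source_height]


def total_encode_jobs(video_count, source_height, enabled=PRESET_HEIGHTS):
    """Number of separate encodes needed to import a whole archive."""
    return video_count * len(renditions_for(source_height, enabled))


print(renditions_for(1440))           # [144, 240, 360, 480, 720, 1080, 1440]
print(total_encode_jobs(60, 1440))    # 420
print(total_encode_jobs(60, 1440, enabled=(360, 720, 1080, 1440)))  # 240
```

Under those assumptions, 60 videos at 1440p turn into 420 encodes with everything enabled, and trimming the ladder to four resolutions cuts that to 240. Fewer presets means less CPU time and less disk, at the cost of coarser steps in the resolution picker.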

YouTube Sync

PeerTube offers a way to sync a video library from YouTube, adding new videos as they’re published. It uses yt-dlp behind the scenes for this, but I found that after a handful of videos it triggered YouTube’s bot protection and failed to download the rest, so I’m forced to upload my videos manually. Again, not a show-stopper for me, but maybe instead of using the public video URL they could implement authentication via OAuth or a Google Cloud token, so YouTube knows it’s me backing up my videos and not some nefarious scraper. They should know anyway, since I’m doing it from the same IP I uploaded the most recent ones from, but apparently that sort of common sense is too much to ask for.
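One possible stopgap is to run yt-dlp yourself, outside PeerTube, with long pauses between downloads and cookies from a logged-in browser session. The sketch below uses yt-dlp’s Python API. The option keys are real `YoutubeDL` parameters, but the helper names, the pause lengths and the file names are assumptions of mine, and there is no guarantee this gets past the bot protection. The downloaded files would still need uploading to PeerTube by hand.

```python
def build_ytdlp_options(output_dir, cookie_file=None, archive_file="downloaded.txt"):
    """Build a YoutubeDL options dict that paces requests and skips finished videos."""
    opts = {
        "outtmpl": f"{output_dir}/%(upload_date)s - %(title)s [%(id)s].%(ext)s",
        "download_archive": archive_file,  # records IDs already fetched
        "sleep_interval": 30,              # minimum pause before each download (seconds, a guess)
        "max_sleep_interval": 90,          # upper bound for the randomised pause (a guess)
        "ignoreerrors": True,              # carry on if a single video fails
    }
    if cookie_file:
        # Netscape-format cookie file exported from a logged-in browser.
        opts["cookiefile"] = cookie_file
    return opts


def download_channel(channel_url, output_dir, cookie_file=None):
    """Download every video from channel_url that is not yet in the archive file."""
    import yt_dlp  # imported lazily so the options helper works without yt-dlp installed

    with yt_dlp.YoutubeDL(build_ytdlp_options(output_dir, cookie_file)) as ydl:
        return ydl.download([channel_url])
```

The archive file means an interrupted run can be restarted later without fetching the same videos twice, which matters when the pauses are this long.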

So anyway, that’s the current state of play with woe2youtube. It should catch up to YouTube as and when it manages to finish transcoding the videos I’ve uploaded.
